As artificial intelligence (AI) develops and its uses continue to grow, there’s still a discussion to be had around how to use AI in a trustworthy and ethical way.

Businesses need to lead the process by starting an honest, global conversation about the benefits of AI, not only for industry but also for governments and society at large, a Sage Group report on building a competitive yet ethical AI economy has concluded.

“The next challenge will be to move the global conversation away from AI as a threat, or replacement for humans, and towards encouraging businesses to approach AI as a complement to human ingenuity,” the report states.

According to the study that Sage conducted with a group of international business executives and U.K.-based government officials, the key is for industry leaders, businesses and C-suite executives to define ethical principles that will guide AI development.

This discussion comes as the global AI market hits its stride. The International Data Corporation (IDC) reports that industries are aggressively investing in AI and cognitive systems, and that worldwide spending on these systems will reach $19.1 billion this year, an increase of 54 per cent over 2017.

One example that evokes the robot futures of I, Robot or the Terminator movies is a Fast Company report about an AI-powered “psychopath” named Norman, which was created as an experiment to show people how AI systems can be as good or bad as the people and data sets that make them. Fast Company’s article states that “bias can be baked in very easily, and very unintentionally.”

Another example is ProPublica’s 2016 investigation, which found that the AI systems U.S. judges use to help predict how likely offenders are to re-offend were biased against African Americans.

Consumers will hold companies responsible for how AI is used and operated, Sage states, and that accountability will rest with C-suite executives. The key is to stay ahead by creating ethical practices and tests that go beyond building the technology and continually evaluate the risks and biases in AI systems.

The British company’s report states that businesses and governments need to work together to create a future in which interactions with technology are positive, genuinely help people and improve work, all within an environment of understanding and trust. Implementing an ethical framework for your company is a good place to start, it finds, laying the building blocks for the future of AI technology.

The Canadian government, for its part, is starting to recognize the need to address these issues and to implement plans that keep up with the fast-changing world of AI.

The federal government, as ITBusiness.ca sister site IT World Canada has previously reported, has provided funding to groups such as the Vector Institute for Artificial Intelligence and the Alberta Machine Intelligence Institute to help develop a pan-Canadian AI strategy.

The Government of Canada’s chief information officer (CIO), Alex Benay, has also been a leader in the conversation around the need for ethical AI practices, and has argued that the government currently lacks much-needed regulation.

https://twitter.com/AlexBenay/status/983869169438416901

Benay is also the co-founder of the CIO Strategy Council, a not-for-profit that brings together public- and private-sector CIOs to discuss digital issues and help set industry standards. In a recent release, the Council called itself an emerging leader “committed to spearheading the development of the first-ever globally-recognized standards for the ethical use of both AI and big data.”

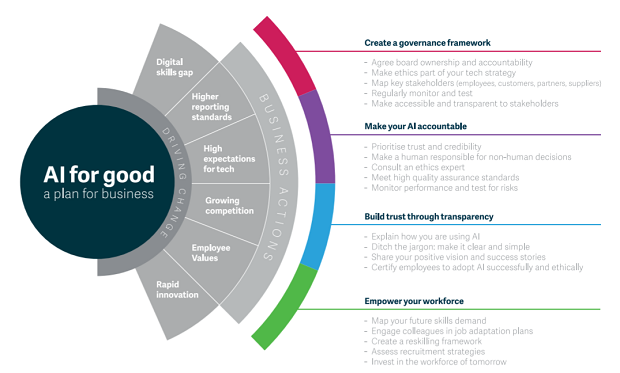

The Sage report offers its own idea of what a framework for building ethical AI practices for business and governments would look like. It suggests creating a government framework, ensuring that AI is held accountable, building trust through transparency, and empowering the workforce.

On the economic side, the Sage report argues that ethical AI is central to successful business models and decision-making, and that education is needed both within companies and for society as a whole.

“People need to understand how AI works, why it provides the best solution in a given context and why they should welcome assistance from an AI powered platform,” states the report.

Cases like the U.S. court systems and the “Norman” AI, which can erode public trust in AI, are exactly what Sage, Benay and the CIO Strategy Council are trying to counter by promoting a proactive approach led by industry leaders, with support from governments.